Adversarial Machine Learning Introduction

Adversarial Machine Learning (AML) is a discipline focused on studying vulnerabilities and security flaws in machine learning models with the aim of making them more secure. It seeks to understand how these models can be deceived and manipulated.

In recent years, machine learning in particular, and artificial intelligence in general, have experienced tremendous growth and are being integrated into critical decision-making processes. Some examples include medical diagnoses and future autonomous vehicles. For this reason, it is essential to minimize the possibility that such decisions could be manipulated by malicious actors. A striking example is the Optical Adversarial Attack (OPAD), in which researchers from Purdue University demonstrated that a “stop” sign could be interpreted as a speed limit sign:

https://arxiv.org/pdf/2108.06247

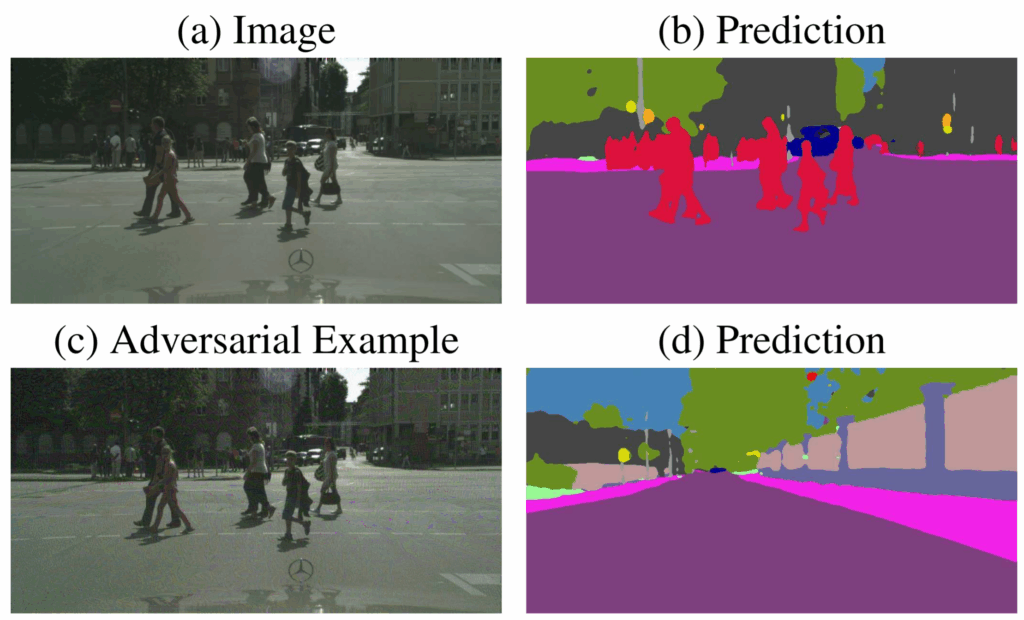

In 2017, researchers such as Jan Hendrik Metzen also demonstrated how, by adding noise, the model could fail to detect pedestrians and lead the car to believe the road was clear of obstacles:

Other attacks have also been described against models such as the well-known LLMs, which have made it possible to extract sensitive information or obtain responses that were not intended. Such is the concern about these attacks that even OWASP has published a Top 10 for LLMs since 2023, and it has been updated in 2025:

https://owasp.org/www-project-top-10-for-large-language-model-applications

What makes ML models particularly vulnerable?

- They rely on data: ML models wouldn’t exist without their training data, from which they learn. If this data is incorrect, biased, or manipulated, the model’s responses will be affected.

- They are complex and often opaque: Many ML models are like “black boxes,” making it difficult to understand how they make decisions.

- Transferability of attacks: Attacks crafted against one model can be effective against other similar models. https://llm-attacks.org/

- Lack of security and robustness by design: Security by design should be a fundamental principle in any system, including ML models. However, these models are typically optimized for accuracy rather than resisting attacks, so small perturbations in the input data can lead to significant changes in the output.

At Red Team AI Labs, we will study, research, and put into practice these attacks in controlled environments in order to develop effective defense strategies.

The following is not intended to be an exhaustive list of machine learning model attacks, but rather a few examples that we will be covering in upcoming posts:

- Model evasion attacks

- Model extraction attacks

- Model inversion

- Model poisoning

- Attacks specifically targeting LLMs

- Attacks on AI infrastructure

Leave a Reply